Can we trust AI in the classroom?

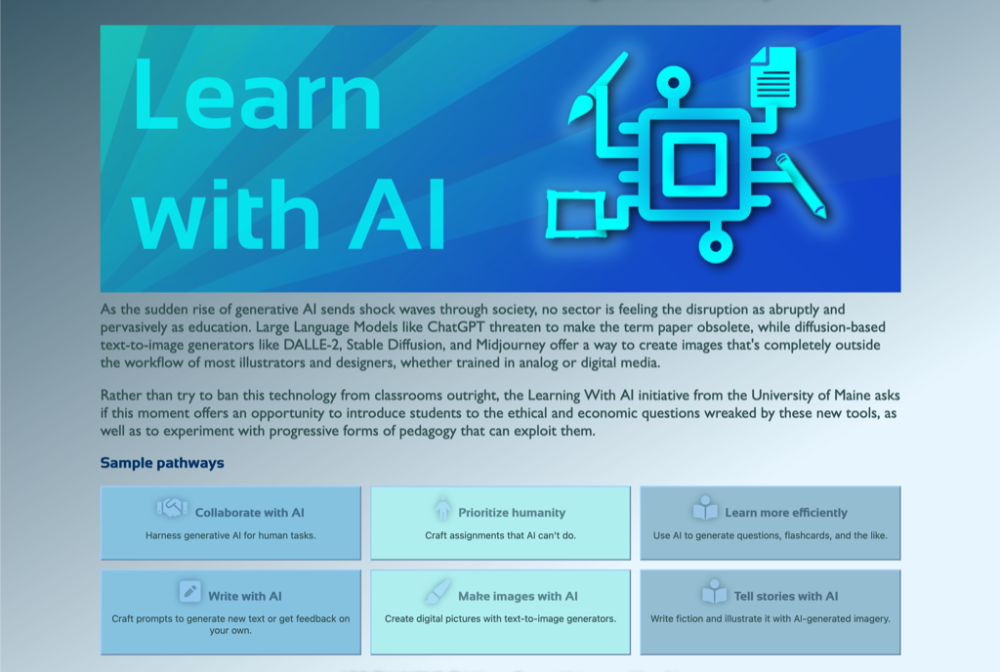

New Media’s Learning With AI partnership with Computer Science and other campus units continues to explore new creative and ethical questions posed by the rapid rise of AI. Its recommendations include a framework for deciding when it’s OK to use ChatGPT and a novel approach to assigning a term paper.

The expanding Learning With AI toolkit now documents 300 learning strategies and resources, together with a tag-based interface that helps educators and students quickly jump to intersections of topics such as “history + assignment” or “syllabus + plagiarism.”

Among the educational discussions and workshops sparked by this initiative is a joint talk by New Media alumnus John Bell and professor Jon Ippolito at USC’s Future of Writing symposium. Bell and Ippolito explored the ramifications if innovations in large language models shift the focus of writing from human-readable to machine-readable communication.

In a separate webinar for the Institute of Electrical and Electronics Engineers (IEEE) and UMaine AI, Ippolito proposed an inverse relationship between the reliability and creativity of generative AI. Arguing that its creative aspects compromise its trustworthiness, he said generative AI excels at opportunistic tasks, where its probabilistic nature can yield serendipitous solutions, but is poorly suited to prescriptive tasks that demand precision. So a teacher might lean on ChatGPT for impromptu feedback on a student proposal, but avoid it for a binding commitment like grading a final project.

The first in a new series of UMaine Salons asked faculty and students to weigh in on the potential for a more engaged university. New Media’s Joline Blais emphasized the need to address climate change and community connection in higher education. In the second salon, Ippolito and Peter Schilling of the Center for Innovation in Teaching and Learning proposed ways for faculty to incorporate generative AI in their courses.

One such approach is the AI Sandwich, a model intended to resuscitate the term paper for the ChatGPT age. In this model, a writer leans on a chatbot at the start to brainstorm research questions, and again at the end to turn rough notes into a draft. Sandwiched between these two slices is a stage where the author gathers field research that only a human can accomplish.

Examples of knowledge that humans can produce but large language models can’t include physical items discovered in archives, digital records in online databases inaccessible to Google, and oral histories or local news gathered in students’ hometowns. Ippolito argues that such research puts students in charge of producing knowledge, and may even reconnect them and the universities they attend to real-world communities.

New Media and Digital Curation faculty also led a workshop for Eastern Maine Community College and the Maine Mathematics and Science Alliance, while Ippolito was interviewed for an Inside Higher Ed story on who should get credit when generative AI mashes up texts and images made by human creators. For more details on these developments, see “The future of writing” and “Julius Caesar’s last breath.”