Research computing services provide access to nationally recognized computing and data solutions for the research community through collaboration with premier computing centers. ARCSIM is part of Coordinated Operating Research Entities (CORE) and is overseen by the Office of the Vice President for Research and Dean of the Graduate School. This oversight provides assurance of UG Service Center compliance. Refer to the Office of Research Administration for more information about compliance considerations in developing proposals and executing research computing activities.

Free HPC and Cloud technical documentation is available in the ARCSIM Guides wiki. Please note our HPC acceptable use policy.

Our services include technical support and are available at nationally competitive rates. University of Maine System faculty and researchers can leverage negotiated agreements and competitive rate structures by contacting ARCSIM today.

Services Offered

- Rate structures compliant with federal guidelines and UMS APLs for CPU and GPU computing

- Streamlined onboarding process

- Educational opportunities (hands-on workshops, webinars)

- Budget monitoring and web-based tools

- Grant budget requests

- Individualized help documentation

- Recommendations on resources to enhance your research

ARCSIM supports several data storage solutions, including cloud-based resources and cluster-based backup options. Our storage services offer a competitively priced, UG-compliant alternative to storage on local platforms. ARCSIM provides technical assistance for the preferred partners listed below, and can also provide guidance on other platforms that best meet your research needs.

*UMS:IT offers free general-purpose storage options to all students, staff, and faculty campus-wide (e.g., Google Drive, Microsoft OneDrive). On their own, these options are not intended as long-term archival storage, and the risk of data loss is higher without a backup strategy.

Preferred Backup Storage Solutions

Ohio Supercomputer Center (OSC) project storage is available for all data backup needs and can be combined with computing resources. There are no ingress or egress fees. A Protected Data Service is available for controlled data. Cost: $8/TB/month for UMS researchers.

Wasabi S3 storage is an ARCSIM preferred vendor for cloud-based data storage needs and is a pay-as-you-go service. Cost: $6.99/TB/month.

If you are interested in other cloud service providers, please reach out for more information. Other providers offer different tiers of storage and incur transfer fees.

Training and Workshop Events

ARCSIM offers a range of educational opportunities, including in-person training sessions, online workshops, self-paced tutorials, and presentations on a variety of topics. Staff can also offer customized training for your group or department: existing training can be tailored to specific needs, or new offerings can be developed. For more information about upcoming events offered by ARCSIM, please visit our News Page.

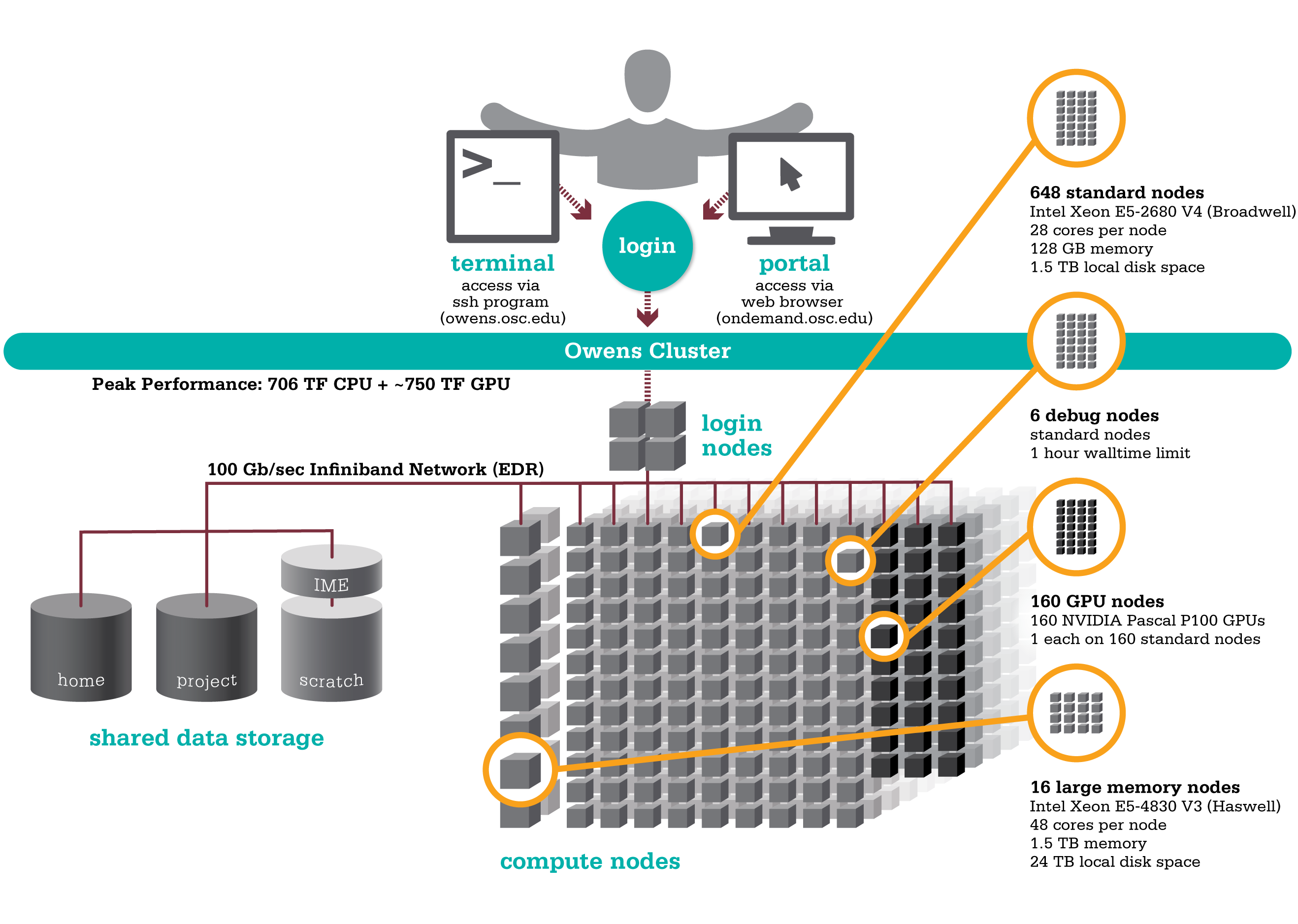

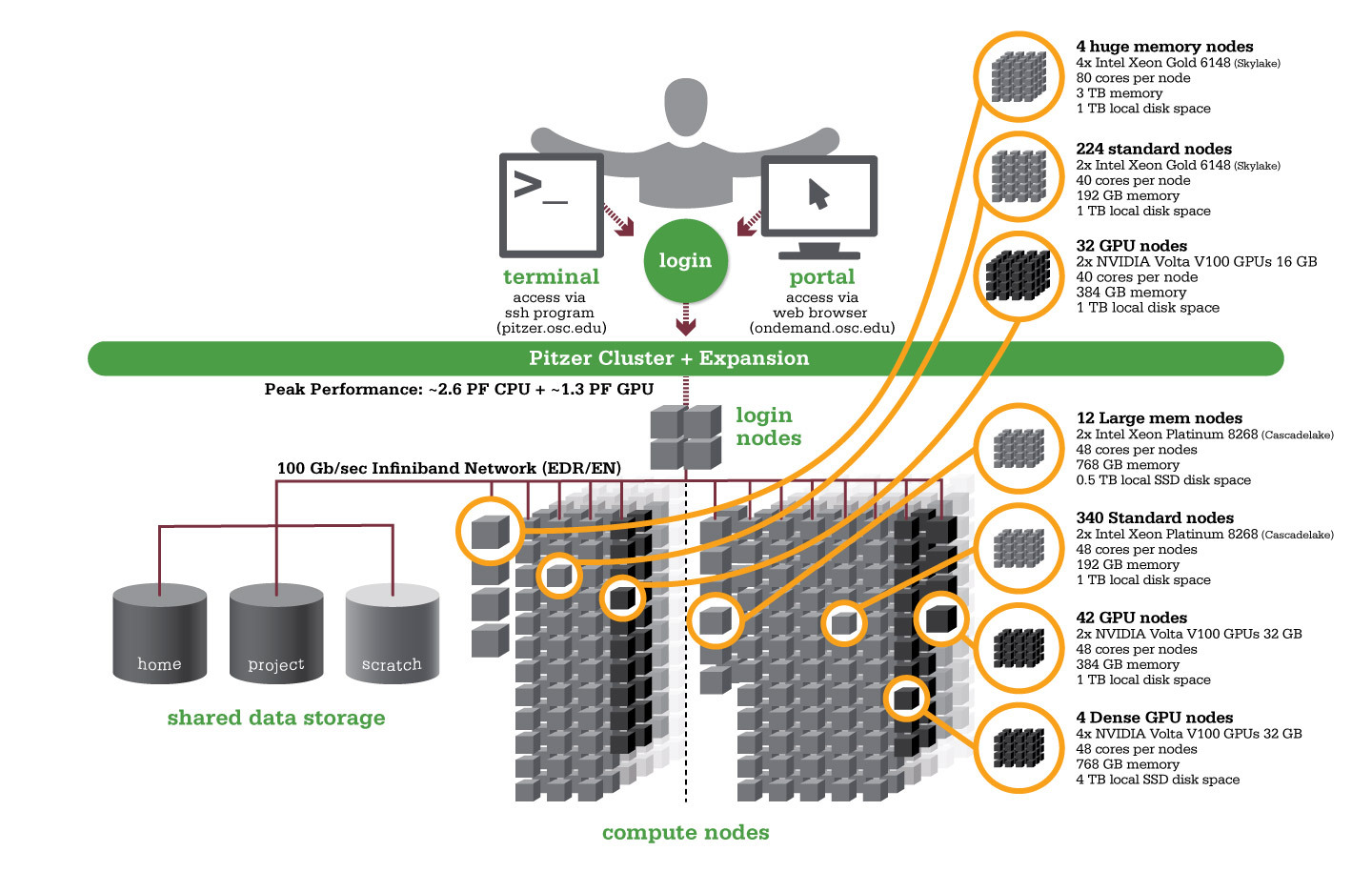

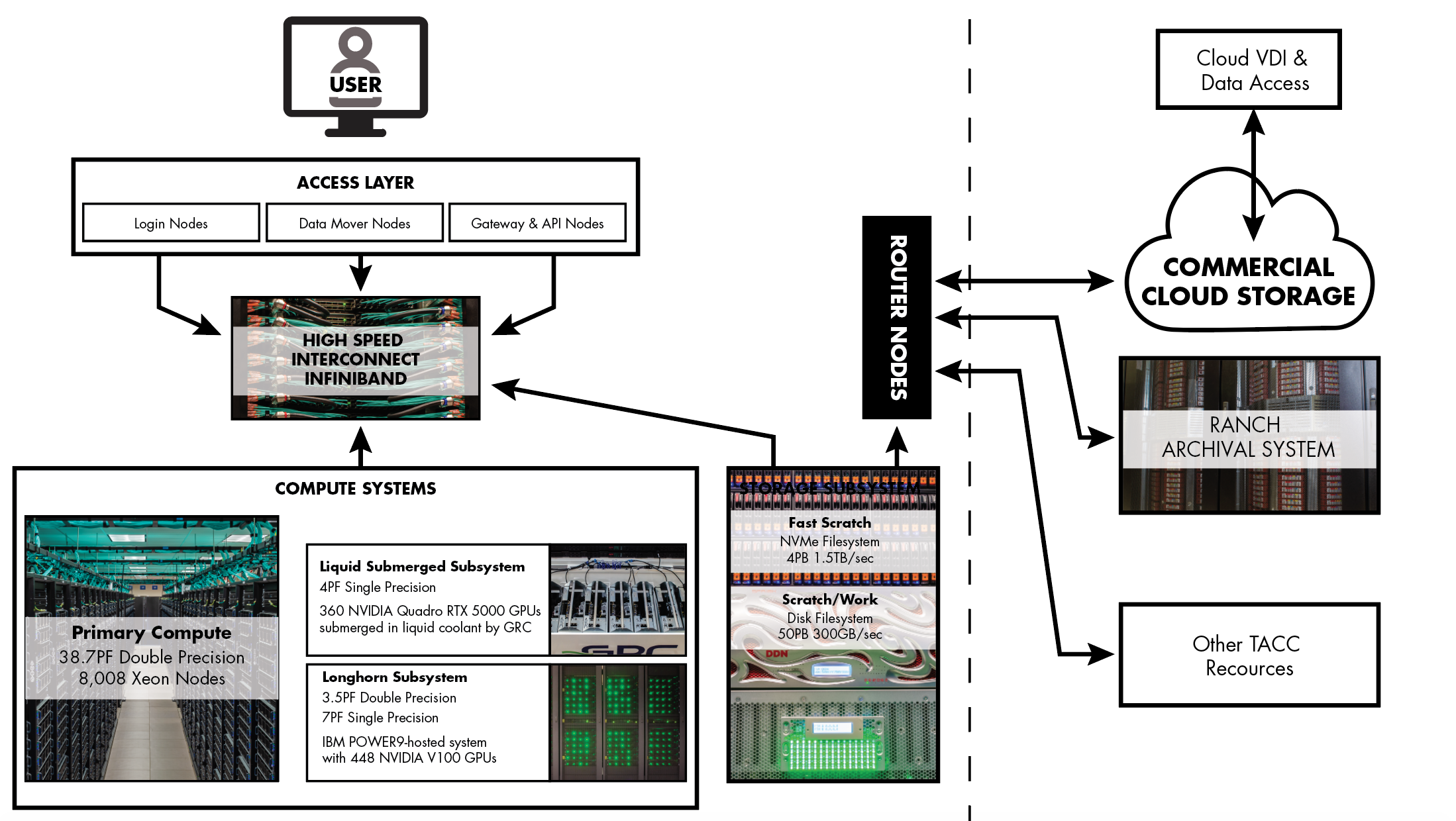

As an Ohio Supercomputer Center (OSC) Campus Champion member, ARCSIM has previously partnered with OSC staff to help support research and education through collaborative webinars and training opportunities. In addition, UMS researchers can attend any of the free virtual training events hosted by OSC on various HPC, programming, and analysis topics, both for beginners and advanced users. Some of the topics presented in the past include RNA-Sequencing Analysis, Linear Regression with R, Parallel Computing with MATLAB, Introduction to Python Environments, and Parallel R.

Upcoming OSC training events can be found on the OSC Events Page.

Previous Training Opportunities

Granting agencies such as NSF and NIH require research projects to meet specific data management requirements. For example, NSF proposal data management plans must cover data formats and standards, data sharing and access, reuse and distribution policies, and archiving. ARCSIM provides services to help researchers create an individualized data management plan for their grants that meets NSF and NIH requirements.

ARCSIM and the University of Maine System have partnered with DMPTool to give System faculty and researchers an easy-access tool for creating Data Management Plans. The operating motto of the project is “Guidance and resources for your data management plan.”

The Maine Dataverse Network (MDVN) is a cloud-based data repository intended to act as a long-term data archive to facilitate data sharing among the research community in accordance with NSF, NIH, NASA, and other granting authority data management plan requirements. It offers a convenient and secure method of sharing and archiving data and is made available to the Maine research community at no cost.

ARCSIM FY25 Rates

Effective February 1, 2025

Definitions used for calculating rates: 1 month = 730 hours and 1 TB = 1000 GB

| Service | Description | Billing Unit | Rate per Unit |
| --- | --- | --- | --- |
| R. Flagg: Project Coordination, Development, Training | Rate for advanced technical support, consulting services or custom development | Hour | $40.00 |
| C. Dalton: Project Coordination, Development, Training | Rate for advanced technical support, consulting services or custom development | Hour | $40.00 |
| 1,000 CPU Core Hours | Billed per single CPU core utilized for one hour at $0.005 per CPU core hour. | Unit | $5.00 |
| Tier 1 GPU Hour | One complete A100 GPU utilized for one hour | Hour | $0.07 |
| Tier 2 GPU Hour | One complete L40/A30/T4 GPU utilized for one hour | Hour | $0.05 |
| Tier 3 GPU Hour | One complete RTX2080 GPU utilized for one hour | Hour | $0.03 |
| Data Storage | Home directory storage (billed in 10 GB increments; based on highest usage amount within period) | TB per Month | $8.00 |
| Virtual Machine | Dedicated node independent from other services. Billed based on hours in an active state within period. Storage billed separately (minimum 80 GB allocation) | vCPU per Month | $2.50 |
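
As a rough illustration of how the rates in the table above combine, the following Python sketch estimates a monthly charge. The rates and the definitions (1 month = 730 hours, 1 TB = 1000 GB) come from this page; the function name `estimate_monthly_cost`, its parameters, the rounding of storage up to the next 10 GB increment, and the prorating of virtual machine charges by active hours are assumptions made for illustration and may not match how ARCSIM actually calculates invoices.

```python
import math
from decimal import Decimal

# Rates copied from the rate table above (effective February 1, 2025).
CPU_RATE_PER_CORE_HOUR = Decimal("0.005")
GPU_RATE_PER_HOUR = {1: Decimal("0.07"), 2: Decimal("0.05"), 3: Decimal("0.03")}
STORAGE_RATE_PER_TB_MONTH = Decimal("8.00")
VM_RATE_PER_VCPU_MONTH = Decimal("2.50")

# Definitions stated on this page.
HOURS_PER_MONTH = 730
GB_PER_TB = 1000
STORAGE_INCREMENT_GB = 10


def estimate_monthly_cost(cpu_core_hours=0, gpu_hours_by_tier=None,
                          peak_storage_gb=0, vm_vcpus=0, vm_active_hours=0):
    """Hypothetical helper: estimate one month's charges in dollars."""
    gpu_hours_by_tier = gpu_hours_by_tier or {}

    cpu = CPU_RATE_PER_CORE_HOUR * Decimal(str(cpu_core_hours))
    gpu = sum(
        (GPU_RATE_PER_HOUR[tier] * Decimal(str(hours))
         for tier, hours in gpu_hours_by_tier.items()),
        Decimal("0"),
    )

    # ASSUMPTION: peak usage is rounded up to the next 10 GB increment.
    billed_gb = math.ceil(peak_storage_gb / STORAGE_INCREMENT_GB) * STORAGE_INCREMENT_GB
    storage = STORAGE_RATE_PER_TB_MONTH * Decimal(billed_gb) / Decimal(GB_PER_TB)

    # ASSUMPTION: the per-vCPU monthly rate is prorated by active hours / 730.
    vm = (VM_RATE_PER_VCPU_MONTH * Decimal(str(vm_vcpus))
          * Decimal(str(vm_active_hours)) / Decimal(HOURS_PER_MONTH))

    return (cpu + gpu + storage + vm).quantize(Decimal("0.01"))


# 10,000 CPU core hours, 100 A100 hours, 255 GB peak storage,
# and a 4-vCPU virtual machine active for half a month.
print(estimate_monthly_cost(
    cpu_core_hours=10_000,
    gpu_hours_by_tier={1: 100},
    peak_storage_gb=255,
    vm_vcpus=4,
    vm_active_hours=365,
))  # prints 64.08
```

`Decimal` is used instead of floating point so that small per-hour rates such as $0.005 add up without rounding drift. Contact ARCSIM for an authoritative quote before budgeting.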

| Service | Description | Billing Unit | Rate per Unit |
| --- | --- | --- | --- |
| R. Flagg: Project Coordination, Development, Training | Rate for advanced technical support, consulting services or custom development | Hour | $80.00 |
| C. Dalton: Project Coordination, Development, Training | Rate for advanced technical support, consulting services or custom development | Hour | $80.00 |
| 1,000 CPU Core Hours | Billed per single CPU core utilized for one hour at $0.01 per CPU core hour. | Unit | $10.00 |
| Tier 1 GPU Hour | One complete A100 GPU utilized for one hour | Hour | $0.14 |
| Tier 2 GPU Hour | One complete L40/A30/T4 GPU utilized for one hour | Hour | $0.10 |
| Tier 3 GPU Hour | One complete RTX2080 GPU utilized for one hour | Hour | $0.06 |
| Data Storage | Home directory storage (billed in 10 GB increments; based on highest usage amount within period) | TB per Month | $10.00 |
| Virtual Machine | Dedicated node independent from other services. Billed based on hours in an active state within period. Storage billed separately (minimum 80 GB allocation) | vCPU per Month | $3.00 |

| Service | Description | Billing Unit | Rate per Unit |
| --- | --- | --- | --- |
| R. Flagg: Project Coordination, Development, Training | Rate for advanced technical support, consulting services or custom development | Hour | $200.00 |
| C. Dalton: Project Coordination, Development, Training | Rate for advanced technical support, consulting services or custom development | Hour | $200.00 |
| 1,000 CPU Core Hours | Billed per single CPU core utilized for one hour at $0.02 per CPU core hour. | Unit | $20.00 |
| Tier 1 GPU Hour | One complete A100 GPU utilized for one hour | Hour | $1.50 |
| Tier 2 GPU Hour | One complete L40/A30/T4 GPU utilized for one hour | Hour | $1.00 |
| Tier 3 GPU Hour | One complete RTX2080 GPU utilized for one hour | Hour | $0.50 |
| Data Storage | Home directory storage (billed in 10 GB increments; based on highest usage amount within period) | TB per Month | $40.00 |
| Virtual Machine | Dedicated node independent from other services. Billed based on hours in an active state within period. Storage billed separately (minimum 80 GB allocation) | vCPU per Month | $12.50 |

Items to Note:

Pre-payments are not allowed under any circumstances. All payments must be for services rendered. Services purchased with sponsored funds must be directly allocable to the funding project.

CORE research resources are subsidized by the Office of the Vice President for Research and Dean of the Graduate School (OVPRDGS) through the use of MEIF institutional funds, to advance research and economic development for the benefit of all Maine people.

Updated 1/13/2025

Acknowledge Scientific Computing

Scientists who publish research performed using ARCSIM resources and capabilities should include an acknowledgement in any resulting publications, including journal articles, presentations, reports, proceedings, and book chapters. If ARCSIM staff contribute significantly to the results of the work, authorship should also be considered. Please use the text:

“This work was supported in part through the computational resources and staff expertise provided by Advanced Research Computing, Security, and Information Management (ARCSIM) at the University of Maine at Orono.”